A Child Development Approach to Human-in-the-Loop Coding With AI

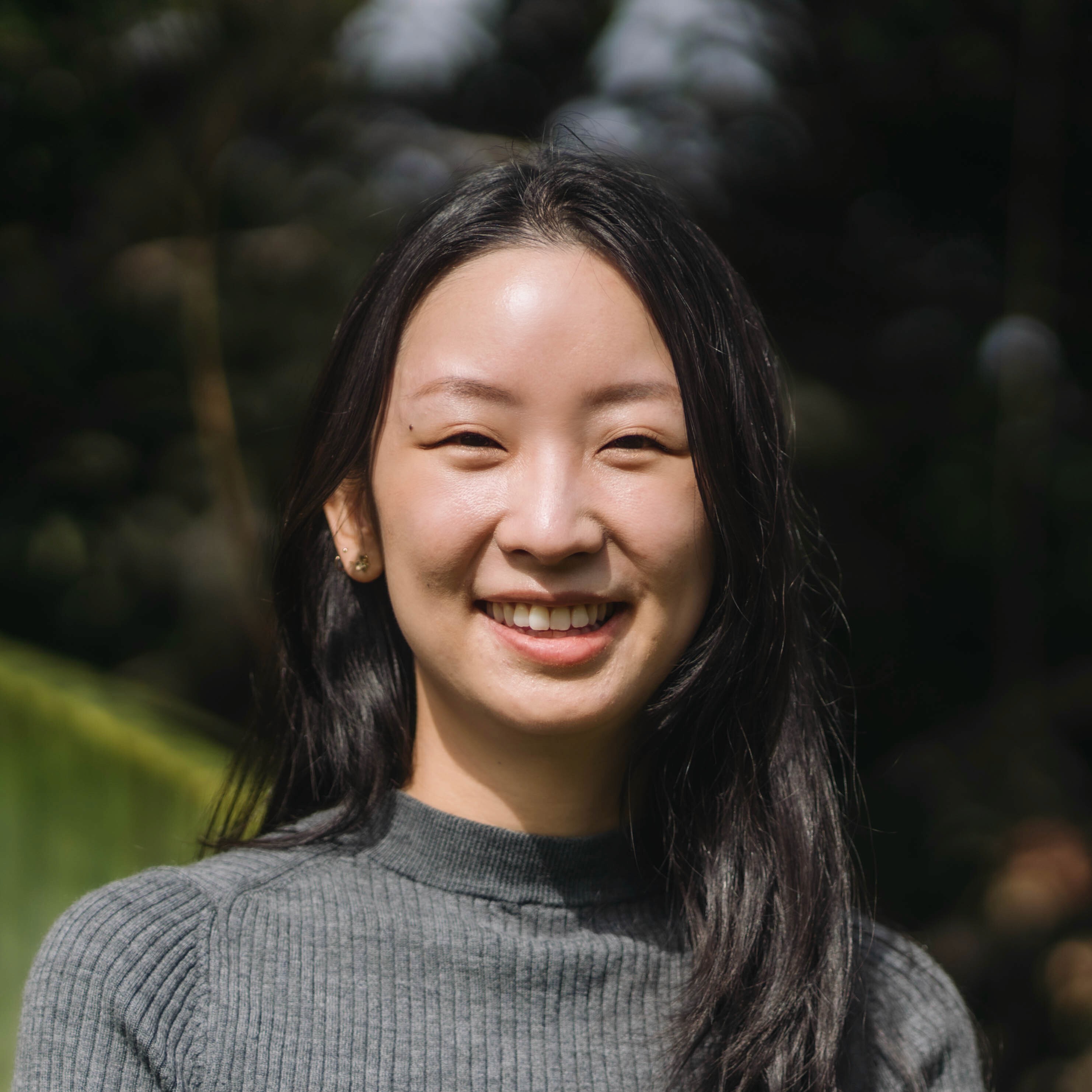

Eric Skram

Co-Founder and Senior Staff Engineer

Jul 10, 2025

Recent advances in generative AI are driving one of the biggest shifts ever in how software is built and deployed. As a company on the forefront of engineering operations, Tempest has been all in on that shift, and we’re pleased to share some of the lessons we’ve learned along the way. Here, Co-Founder and Senior Staff Engineer Eric Skram reflects on the surprising parallels between being a parent and working with AI, and how behaviors he learned while raising his son helped him get better results from AI coding assistants.

I used to treat an AI coding assistant like a vending machine. Feed it a perfect prompt, hope the snack drops, curse when it jams, and start over. The cycle felt brittle and glacial—hardly the speed-of-thought magic the hype promised.

After too many late-night retries, I stumbled on an unlikely mentor: my two-year-old son. Guiding him through language, blocks, and the fine art of tying your shoes showed me a better way to guide a large language model.

Parenting, it turns out, is an ideal blueprint for co-programming with AI.

From one-shot frustration to gentle parenting

Looking back, my early workflow mirrored an anxious new parent who expects a toddler to nail every developmental milestone on the first try. I agonized over phrasing, fired a single request at the model, and binned the code when it hiccupped. It worked eventually, but coding this way was slow, and worst of all, it wasn’t very fun.

Those failures weren’t the model’s fault. They were mine. I was withholding feedback, the raw fuel any learner needs. When my son tries a new word and doesn’t quite nail the pronunciation, I don’t shove a dictionary at him and walk away. I correct the syllable and let him know once he gets it right, praising him for making the effort the whole time. Software, surprisingly, responds to the same gentle course-correction.

I began treating the AI like a curious kid: expect missteps, correct them quickly, and capture the lesson. The change felt small—just a tweak in tone—but the impact was huge.

Now, when the assistant misuses an SDK or confuses an auth flow, I don’t scrap the session. I say, “Good try. Here’s the mistake. Please fix it and write a rule so you never repeat it.” The model answers with a short markdown file of self-authored guidelines, stores it, and re-reads those guardrails on the next task.

This isn’t real learning in the neurological sense; it’s closer to taping a reminder note on the fridge. But the illusion of memory is enough. My toddler doesn’t actually memorize every correction either. He recalls patterns because we practice them in context. The assistant does the same, consulting its growing rulebook whenever I call for a new snippet.

At first the model needed lots of nudges—just like my son asking “Why?” ten times in a row. But a funny thing happened as the rulebook thickened: output quality leapt.

Eventually I was easily generating new Tempest integrations in Go in a single shot… despite not being a native Go coder.

Why? Because each tiny correction removed an entire class of future failure. Once the assistant understood things like why a struct field’s JSON tag must match the key in the payload, it stopped guessing. Those lessons compounded until I could “one-shot” a feature again, this time with confidence it would pass unit tests.

Why iterative feedback beats perfectionism

This parenting-inspired loop does more than save keystrokes; it reshapes the developer’s job. I used to spend 60 percent of my time typing and 40 percent thinking about product and architecture. With an assistant that iterates in seconds, the typing portion is shrinking toward zero. That frees me to refine specs, design cleaner APIs, and chase the “taste” questions no model can answer: Does this feel good? Is it delightful?

Speed matters outside my IDE as well. When a team can validate an idea in the first 10 percent of the schedule instead of the last 60 percent, product risk plummets. You ship fewer “first pancakes” and spend more cycles on flavor instead of raw batter.

Practical steps to raise your AI right

A quick recap, distilled into parent-tested habits you can start today:

Expect mistakes and plan for them. Open every session assuming the first draft will wobble. That mindset removes frustration and primes you to give constructive feedback.

Correct, don’t restart. Highlight the single error, explain why it’s wrong, and ask the model to fix it. Once the fix works, have it document what it did and generate a rule prohibiting that mistake. Treat retries like practice reps, not new games.

Store the rulebook close to the code. I keep the markdown file in the project root where the assistant can “read” it before generating anything new. The proximity reinforces good habits.

Automate the yardstick. Pair the rules with unit tests, linters, or type checks so the assistant sees objective pass/fail signals without waiting for you. Kids learn faster when they get instant feedback; models do too.

Celebrate small wins. When the assistant nails a tricky compile or cleans up ESLint errors on its own, give positive reinforcement. It may seem weird, but there’s some evidence to suggest that politeness and encouragement lead to better outputs. A quick “Great job—run the full suite next” can boost compliance in subtle ways.

Adopt these five and you’ll notice the ratio of supervision to autonomy tilting in your favor.

Beyond coding: the human payoff

The parallel goes deeper than productivity metrics. Parenting teaches patience, empathy, and the art of breaking complex ideas into bite-sized lessons. Those same skills make you a better teammate. Colleagues who struggle with tooling often need the identical approach: clear examples, immediate feedback, and guardrails that let them explore safely.

An AI assistant, used this way, becomes a rehearsal space for leadership. You practice giving precise instructions, spotting root causes, and nudging rather than dictating. The machine never rolls its eyes, so you can iterate on your communication style until it lands with real humans.

Closing the loop

My son still struggles sometimes, and the AI still forgets an edge case now and then. But our household—and my codebase—runs smoother because I stopped demanding perfection and started teaching process. Children thrive on secure boundaries and consistent feedback. So do language models. Treat your AI like a toddler who wants to help, and you’ll get cleaner commits, faster prototypes, and a lot more fun along the way.

In other words: parent your tools, not just your kids. The lessons carry over, and the rewards compound.
